Dolby Vision (Legacy)

Dolby Vision is Dolby Laboratories’ implementation of High Dynamic Range (HDR) video. HDR video takes advantage of modern displays that can show a wider range of luminance and color than previous standards allowed.

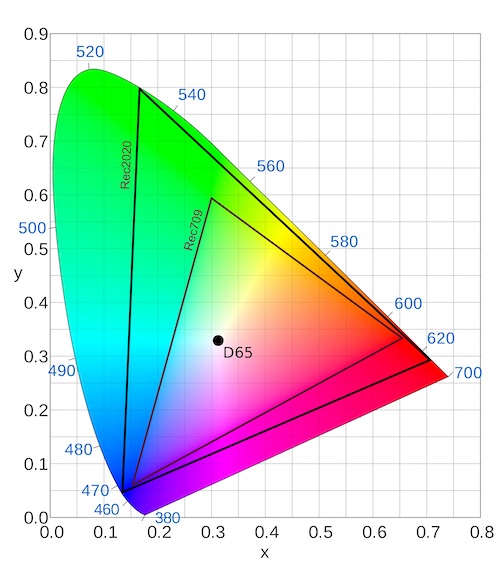

Here is a visual representation of how much more color information can be displayed in HDR video (Rec 2020) compared to traditional High Definition (Rec 709) video.

High Dynamic Range video also targets a much higher luminance; while a typical HD display is capable of displaying 100-400 nits of peak brightness, many HDR displays can display upwards of 1500 nits. The standard is capable of supporting displays up to 10,000 nits in the future.
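
The 10,000 nit ceiling matches the range of the PQ transfer function (SMPTE ST 2084), which appears later in this tutorial as one of the accepted transfer functions. As a rough illustration, the sketch below maps absolute luminance to a normalized PQ signal value using the constants published in ST 2084. The function name `pq_encode` is ours and is not part of any Hybrik or Dolby API.

```python
# Sketch of the SMPTE ST 2084 (PQ) inverse EOTF: nits -> normalized signal in [0, 1].
# `pq_encode` is an illustrative name, not a Hybrik or Dolby API.

M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10_000.0       # upper end of the PQ luminance range


def pq_encode(nits: float) -> float:
    """Map absolute luminance in nits to a PQ signal value, clamping to 0..10,000 nits."""
    y = max(0.0, min(nits, PEAK_NITS)) / PEAK_NITS
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


if __name__ == "__main__":
    for nits in (100, 400, 1500, 10_000):
        print(f"{nits:>6} nits -> PQ signal {pq_encode(nits):.3f}")
```

Roughly half of the PQ signal range is spent on luminance up to 100 nits, so the brighter HDR range does not come at the expense of precision in the darker range that SDR content already uses.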

Apple made a nice primer video on the differences between HD and HDR video, as well as the differences between HDR10 and Dolby Vision: watch Apple’s Tech Talk.

HDR10 vs Dolby Vision

HDR10 defines static metadata for the entire length of the video, with information such as maximum and average luminance values as well as original mastering display information. This allows the playback display to map the video’s levels to its own capabilities. Because the metadata is static, it does not change over the course of the video.

For Dolby Vision, this data is dynamic and can be specified per shot, per scene, or per frame. It also encompasses even more information than the HDR10 metadata. While an HDR10 video source typically has its metadata encoded into the video, a Dolby Vision Mezzanine file can have the dynamic metadata either embedded in the video container or as a sidecar file.
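
To make the static versus dynamic distinction concrete, here is a minimal sketch. The class names, field names, and sample values are hypothetical simplifications chosen for illustration; they are not the actual HDR10 or Dolby Vision metadata formats.

```python
# Illustrative contrast between static and dynamic HDR metadata.
# All class names, field names, and values are hypothetical simplifications.
from dataclasses import dataclass
from typing import List


@dataclass
class StaticMetadata:
    max_nits: float      # brightest value anywhere in the program
    avg_nits: float      # average-level figure for the whole program


@dataclass
class ShotMetadata:
    first_frame: int     # inclusive
    last_frame: int      # inclusive
    max_nits: float
    avg_nits: float


def static_peak(meta: StaticMetadata, frame: int) -> float:
    """Static metadata gives the display the same answer for every frame."""
    return meta.max_nits


def dynamic_peak(shots: List[ShotMetadata], frame: int) -> float:
    """Dynamic metadata lets the answer follow the shot that contains the frame."""
    for shot in shots:
        if shot.first_frame <= frame <= shot.last_frame:
            return shot.max_nits
    raise ValueError(f"no metadata covers frame {frame}")


# Made-up values: a dim interior shot followed by a bright exterior shot.
PROGRAM = StaticMetadata(max_nits=1000.0, avg_nits=180.0)
SHOTS = [
    ShotMetadata(0, 239, max_nits=120.0, avg_nits=40.0),
    ShotMetadata(240, 479, max_nits=1000.0, avg_nits=300.0),
]

if __name__ == "__main__":
    for frame in (100, 300):
        print(frame, static_peak(PROGRAM, frame), dynamic_peak(SHOTS, frame))
```

With static metadata the display has to plan for the 1000 nit peak even during the dim shot; with per-shot data it can map that shot against its own, much lower, peak.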

Dolby Vision brings brighter images, greater contrast, better highlights and more color. It also brings more consistency across displays as it considers not just the content itself but also the capabilities of the display the image is being rendered on.

More details on Dolby Vision:

Dolby Vision Encoding Targets Profiles and Levels

There are several HEVC variants of Dolby Vision depending on the needed application. Read in depth about this in the Dolby Vision Profiles and Levels Specification.

Some examples are:

- Profile 5 (not cross-compatible with HDR10 or SDR)

- Profile 8.1 (cross-compatible with HDR10)

- Profile 8.2 (cross-compatible with SDR)

At this time, Hybrik supports only Profiles 5 and 8.1. Profile 8.1 support is limited to a single output per job, while Profile 5 can output multiple layers. See the example jobs.

Dolby Vision Sources for Hybrik

While Dolby has recommendations and best practices for Dolby Vision mastering, by the time you get to Hybrik you will need a mezzanine source.

You will need the following to encode Dolby Vision in Hybrik:

- Dolby Vision Mezzanine File

  - JPEG 2000 or Apple ProRes video codec

  - Rec 2020 or DCI-P3 color space in either PQ (Perceptual Quantizer) or HLG (Hybrid Log-Gamma)

- Dolby Vision Metadata

  - Metadata can be a sidecar file or interleaved in the mezzanine file (see input formats below)

Input formats

Depending on the format, Dolby Vision metadata can either be interleaved into the source file or be kept separate as a sidecar file. Possible formats for Hybrik are:

- MXF with interleaved metadata

- MXF with sidecar metadata XML

- ProRes with sidecar metadata XML

- MXF with video track only and sidecar MXF with metadata only

Hybrik can also accept these formats when packaged as an IMF. Read our Sources tutorial to learn more about specifying a CPL (Composition Playlist) source.

NOTE: We do not support a “complex” CPL, that is, a CPL with multiple segments. Your CPL may have an entry point > 0 (and Hybrik will respect the offset), but it may not have more than one segment.
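
Before submitting an IMF source, it can help to check the CPL locally. The following is a minimal sketch, assuming a standard SMPTE ST 2067-3 CPL in which segments are `Segment` elements and offsets are `EntryPoint` elements. The function names and the trimmed sample CPL are made up for illustration, and the check only approximates whatever validation Hybrik itself performs.

```python
# Sketch: count <Segment> elements in an IMF CPL and list the EntryPoint values.
# Matching uses local element names, so the exact ST 2067-3 namespace year does not matter.
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Strip the '{namespace}' prefix that ElementTree puts on tags."""
    return tag.rsplit("}", 1)[-1]


def inspect_cpl(cpl_xml: str) -> dict:
    root = ET.fromstring(cpl_xml)
    segments = [el for el in root.iter() if local_name(el.tag) == "Segment"]
    entry_points = [
        int(el.text)
        for el in root.iter()
        if local_name(el.tag) == "EntryPoint" and el.text
    ]
    return {
        "segment_count": len(segments),
        "entry_points": entry_points,
        "single_segment": len(segments) == 1,
    }


# Heavily trimmed, made-up CPL used only to exercise the function.
SAMPLE_CPL = """<CompositionPlaylist xmlns="http://www.smpte-ra.org/schemas/2067-3/2016">
  <SegmentList>
    <Segment>
      <SequenceList>
        <MainImageSequence>
          <ResourceList>
            <Resource><EntryPoint>48</EntryPoint><SourceDuration>240</SourceDuration></Resource>
          </ResourceList>
        </MainImageSequence>
      </SequenceList>
    </Segment>
  </SegmentList>
</CompositionPlaylist>"""

if __name__ == "__main__":
    print(inspect_cpl(SAMPLE_CPL))
```

A result with `single_segment` set to `False` indicates a CPL that falls under the “complex” case described in the note.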

Netflix has a bunch of open source content available. We recommend using Meridian for testing; they posted the assets in a Tech Blog Post.

Getting Set Up for Dolby Vision encoding in Hybrik

Due to the complexity of the encoding and number of steps, Dolby Vision jobs are more complicated than an average transcode job in Hybrik. You need to have a few things configured in your account to get started.

Computing Groups

There are several steps that occur during a Dolby Vision encode. We recommend that you configure separate computing groups for these tasks. Our initial recommendations are to set up the following groups with the suggested tags. In the example jobs, these two groups are used for multiple tasks:

| Mandatory Tags | Suggested AWS Instance Type | Group Type |
|---|---|---|
| dolby_vision_preproc | i3.xlarge | Spot |
| dolby_vision_encode | c4.4xlarge | Spot |

If you need more details on configuring computing groups, read our Tagging Tutorial.

Long Running Encodes

If you are doing long-form Dolby Vision encodes with multiple layers, you will want to configure an additional group. The Dolby Vision Compiler task keeps an eye on spawned child jobs and tracks progress. If this instance experiences a spot takeaway, progress is lost and child jobs are re-spawned. We recommend running the compiler on a cheaper on-demand instance. This is typically not an issue for shorter content. If you’re just starting Dolby Vision testing, you can skip creating this group for now and create a compiler group later if you experience problems with spot takeaways.

| Mandatory Tags | Suggested AWS Instance Type | Group Type |
|---|---|---|
| dolby_vision_compiler | c4.large | On Demand |

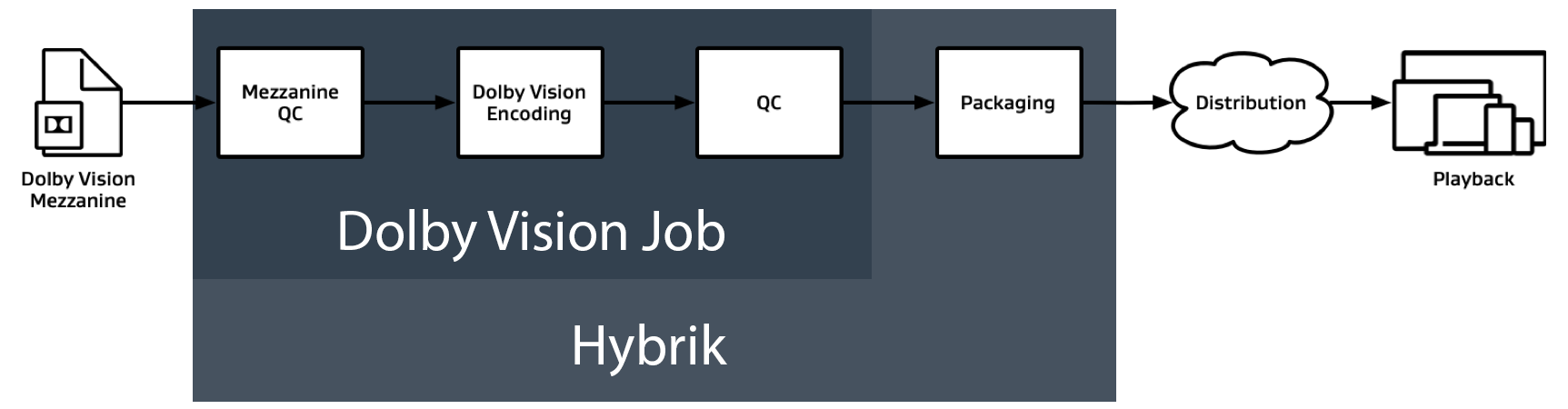
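
The sample job refers to these computing groups through placeholders such as `{{preproc_tags}}` and `{{compiler_tags}}`. The sketch below shows one way to picture that mapping, using plain local string substitution. How Hybrik itself resolves placeholders is not covered in this tutorial, and the choice of group for the QC step is an assumption.

```python
# Sketch: fill {{placeholder}} tokens in a job fragment with computing-group tags.
# This is plain local string substitution, not a description of Hybrik's own mechanism.
import json
import re

# Tag names come from the computing group tables. The QC mapping is an assumption.
TAGS = {
    "preproc_tags": "dolby_vision_preproc",
    "transcode_tags": "dolby_vision_encode",
    "compiler_tags": "dolby_vision_compiler",
    "mezzQC_tags": "dolby_vision_preproc",   # assumption
}

# Matches double-brace tokens only, so single-brace tokens are left alone.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def fill_placeholders(template: str, values: dict) -> str:
    """Replace every {{name}} with values[name]; fail loudly on unknown names."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value for placeholder {name!r}")
        return values[name]
    return PLACEHOLDER.sub(substitute, template)


FRAGMENT = '{"task": {"name": "Mezzanine QC", "tags": "{{mezzQC_tags}}"}}'
RESOLVED = json.loads(fill_placeholders(FRAGMENT, TAGS))

if __name__ == "__main__":
    print(json.dumps(RESOLVED, indent=2))
```

If you skip the separate compiler group while testing, `compiler_tags` would simply point at one of the other two groups.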

Lifecycle of The Dolby Vision Job in Hybrik

Following the sample job linked at the bottom of this tutorial, there are several steps that a Dolby Vision job goes through. The sample Dolby Vision job processes the input Mezzanine and does the encoding and QC. Hybrik is also capable of packaging for HLS, DASH, or Microsoft Smooth Streaming. We’ll walk through the job in order.

QC Checks

In the mezzanine_qc task, the metadata associated with the Dolby Vision master is validated. If invalid metadata is found, the job will fail. This step is optional and may be removed if desired, but it can prevent a failure later in the job.

{
  "uid": "mezzanine_qc",
  "kind": "dolby_vision",
  "task": {
    "name": "Mezzanine QC",
    "tags": "{{mezzQC_tags}}"
  },
  "payload": {
    "module": "mezzanine_qc",
    "params": {
      "location": {
        "storage_provider": "s3",
        "path": "{{destination_path}}"
      },
      "file_pattern": "mezz_qc_report.txt"
    }
  }
},

Audio Encoding

If you wish to mux audio into your Dolby Vision mp4, our Dolby Vision job expects that your audio has already been transcoded into an elementary stream. While this is not required (you can have a video-only mp4), we use a separate transcode task to generate this audio.

Here is a sample elementary audio target for the transcode task; it is included in the sample job:

{
  "file_pattern": "audio_192kbps_elementary.ac3",
  "existing_files": "replace",
  "container": {
    "kind": "elementary"
  },
  "audio": [
    {
      "codec": "ac3",
      "bitrate_kb": 192,
      "channels": 2,
      "source": [
        {
          "track": 1
        }
      ]
    }
  ]
}

In the case of creating an Adaptive Bitrate ladder (ABR), you may wish to output your audio as a stand-alone mp4 instead.
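
As a rough sketch of that variation, the snippet below rebuilds the sample audio target as a Python dict and derives a stand-alone mp4 version from it. The `"mp4"` container kind and the new file name are assumptions rather than values taken from the sample job, so check them against the Hybrik API documentation before use.

```python
# Sketch: the sample elementary audio target as a dict, plus a stand-alone mp4 variant.
# The "mp4" container kind and the new file name are assumptions, not from the sample job.
import copy
import json

ELEMENTARY_AUDIO = {
    "file_pattern": "audio_192kbps_elementary.ac3",
    "existing_files": "replace",
    "container": {"kind": "elementary"},
    "audio": [
        {"codec": "ac3", "bitrate_kb": 192, "channels": 2, "source": [{"track": 1}]}
    ],
}


def as_standalone_mp4(target: dict, file_pattern: str) -> dict:
    """Return a copy of an audio target that writes an mp4 instead of an elementary stream."""
    variant = copy.deepcopy(target)          # leave the original target untouched
    variant["file_pattern"] = file_pattern
    variant["container"] = {"kind": "mp4"}   # assumption: verify against the API docs
    return variant


ABR_AUDIO = as_standalone_mp4(ELEMENTARY_AUDIO, "audio_192kbps.mp4")

if __name__ == "__main__":
    print(json.dumps(ABR_AUDIO, indent=2))
```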

The Dolby Vision Task

After the Mezzanine QC is complete, the job flows to the dolby_vision task.

In Hybrik, the dolby_vision task has several steps. We’ll examine the 720p encode for our example. Let’s start at the top of the task:

{
  "uid": "dolby_vision_1280x720",
  "kind": "dolby_vision",
  "task": {
    "retry_method": "fail",
    "tags": "{{compiler_tags}}",
    "name": "Encode - 1280x720",
    "source_element_uids": [
      "source"
    ]
  },

In this block, we simply define our task, tell it which tags to use (to specify the computing group), and tell it to reference the source file element with a uid of source.

Preprocessing

Next, we define the payload of the task. Here we tell the task to use Profile 5, where to write our files, and which tags to use for preprocessing. The tags determine which computing group the step runs on. We recommend the i3.xlarge EC2 instance type as a starting point for the preprocessing step.

  "payload": {
    "module": "encoder",
    "profile": 5,
    "location": {
      "storage_provider": "s3",
      "path": "{{destination_path}}"
    },
    "preprocessing": {
      "task": {
        "tags": "{{preproc_tags}}"
      }
    },

In the preprocessing step, the pre-processor converts the signal to the appropriate color space, scales the signal, and calculates the Dolby Vision-specific metadata and other data needed by Dolby Vision playback devices. This pre-processor metadata can be reused across outputs sharing the same resolution.

For example, if you want to have two 720p encodes at different bitrates, they can share the same pre-processor data. If you also want to encode a 1080p target, the pre-processor will generate a new set of metadata for that resolution. It is for this reason that we define each resolution as a stand-alone dolby_vision Task within our job.
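
The grouping rule above can be sketched as a small planning helper: rungs that share a resolution land in the same dolby_vision task, and each bitrate becomes its own transcode. The function name and the returned structure are hypothetical. The result is a simplified planning summary, not complete Hybrik job JSON, and only the 1280x720 at 1200 kb rung comes from the sample job.

```python
# Sketch: plan one dolby_vision task per resolution, with one transcode per bitrate.
# The returned dicts are a simplified planning summary, not complete Hybrik job JSON.
from typing import Dict, List, Tuple

Rung = Tuple[int, int, int]  # (width, height, bitrate_kb)


def plan_tasks(ladder: List[Rung]) -> List[dict]:
    tasks: Dict[str, dict] = {}
    for width, height, bitrate_kb in ladder:
        uid = f"dolby_vision_{width}x{height}"   # naming follows the sample job
        task = tasks.setdefault(uid, {"uid": uid, "transcodes": []})
        task["transcodes"].append({
            "uid": f"transcode_{len(task['transcodes'])}",
            "bitrate_kb": bitrate_kb,
        })
    return list(tasks.values())


# Only the first rung comes from the sample job; the other two are made up.
LADDER = [(1280, 720, 1200), (1280, 720, 2400), (1920, 1080, 4500)]
PLAN = plan_tasks(LADDER)

if __name__ == "__main__":
    for task in PLAN:
        print(task["uid"], [t["bitrate_kb"] for t in task["transcodes"]])
```

Here the two 720p rungs share one task, and therefore one preprocessing pass, while the 1080p rung gets a task of its own.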

Transcoding

In our transcodes array, we can specify the output encoding specifications for this resolution.

For efficiency in encoding, we utilize segmented_rendering to divide the media into segments that are encoded in parallel across multiple machines. If you’re not familiar with this feature, see our Segmented Rendering tutorial.

    "transcodes": [
      {
        "uid": "transcode_0",
        "kind": "transcode",
        "task": {
          "tags": "{{transcode_tags}}"
        },
        "payload": {
          "options": {
            "pipeline": {
              "encoder_version": "hybrik_4.0_10bit"
            }
          },
          "source_pipeline": {
            "options": {},
            "segmented_rendering": "{{segmented_rendering}}"
          },

If you are familiar with Hybrik transcode tasks, you will recognize that each output is defined in its own transcode task within this array. We cannot define multiple targets within this transcode task as we can in a typical Hybrik transcode task.

You may configure your HEVC encoding details here; the defaults exist in the sample job.

This step outputs an elementary HEVC stream which will later be muxed with the audio to create our final mp4.

          "targets": [
            {
              "file_pattern": "{{output_basename}}_1280x720_1200kbs.h265",
              "existing_files": "replace",
              "container": {
                "kind": "elementary"
              },
              "nr_of_passes": 1,
              "slow_first_pass": true,
              "ffmpeg_args": "-strict experimental",
              "video": {
                "codec": "h265",
                "preset": "{{transcode_preset}}",
                "tune": "grain",
                "profile": "main10",
                "width": 1280,
                "height": 720,
                "bitrate_kb": 1200,
                "max_bitrate_kb": 1440,
                "vbv_buffer_size_kb": 960,
                "bitrate_mode": "vbr",
                "chroma_format": "yuv420p10le",
                "exact_gop_frames": 48,
                "x265_options": "concatenation={auto_concatenation_flag}:vbv-init=0.6:vbv-end=0.6:annexb=1:hrd=1:aud=1:videoformat=5:range=full:colorprim=2:transfer=2:colormatrix=2:rc-lookahead=48:qg-size=32:scenecut=0:no-open-gop=1:frame-threads=0:repeat-headers=1:nr-inter=400:nr-intra=100:psy-rd=0:cbqpoffs=0:crqpoffs=3"
              }
            }
          ]
        }
      }
    ],
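
The x265_options value is one long colon-separated string, which is easy to break when editing by hand. As a small aid, the sketch below parses it into a dict and writes it back out unchanged. The helper names are ours, and nothing here says which options a Dolby Vision encode requires; treat the sample job's defaults as the reference.

```python
# Sketch: parse the colon-separated x265_options string into a dict and back.
# Helper names are illustrative; the option string is copied from the sample target.

SAMPLE_OPTIONS = (
    "concatenation={auto_concatenation_flag}:vbv-init=0.6:vbv-end=0.6:annexb=1:hrd=1:aud=1:"
    "videoformat=5:range=full:colorprim=2:transfer=2:colormatrix=2:rc-lookahead=48:"
    "qg-size=32:scenecut=0:no-open-gop=1:frame-threads=0:repeat-headers=1:nr-inter=400:"
    "nr-intra=100:psy-rd=0:cbqpoffs=0:crqpoffs=3"
)


def parse_x265_options(options: str) -> dict:
    """Split 'key=value:key=value' into a dict; a bare flag maps to None."""
    parsed = {}
    for item in options.split(":"):
        if not item:
            continue
        key, sep, value = item.partition("=")
        parsed[key] = value if sep else None
    return parsed


def format_x265_options(parsed: dict) -> str:
    """Inverse of parse_x265_options; dicts keep insertion order, so order is preserved."""
    return ":".join(k if v is None else f"{k}={v}" for k, v in parsed.items())


OPTIONS = parse_x265_options(SAMPLE_OPTIONS)

if __name__ == "__main__":
    print(OPTIONS["rc-lookahead"], OPTIONS["scenecut"])
    print(format_x265_options(OPTIONS) == SAMPLE_OPTIONS)
```

In the sample target, rc-lookahead has the same value (48) as exact_gop_frames, which is worth keeping in mind if you change the GOP length.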

Post-Transcode steps

Once the elementary HEVC bitstream is created, the post_transcode steps mux our audio and video together into an mp4. The mp4_mux block is where you name the output mp4 for this transcode. Using source_basename makes the output take the name of the HEVC elementary stream passed into this step.

Adding more entries to elementary_streams lets you mux in multiple audio tracks; the files just need to exist before the dolby_vision task starts.

    "post_transcode": {
      "task": {
        "tags": "{{post_transcode_stage_tags}}"
      },
      "mp4_mux": {
        "enabled": true,
        "file_pattern": "{source_basename}.mp4",
        "elementary_streams": [
          {
            "asset_url": {
              "storage_provider": "s3",
              "url": "{{destination_path}}/audio_192kbps_elementary.ac3"
            },
            "cli_options": {}
          }
        ],
        "qc": {
          "enabled": true,
          "location": {
            "storage_provider": "s3",
            "path": "{{destination_path}}/hybrik_temp"
          },
          "file_pattern": "{source_basename}_mp4_qc_report.txt"
        }
      }
    }
  }
},
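
For jobs with more than one audio track, the elementary_streams list can be generated rather than typed out. This is a minimal sketch that uses only the keys shown in the sample job; the helper name and the second audio file name are hypothetical.

```python
# Sketch: build the elementary_streams list for mp4_mux from a list of audio file names.
# Only keys that appear in the sample job are used; the second file name is hypothetical.
import json
from typing import List


def build_elementary_streams(destination_path: str, audio_files: List[str]) -> List[dict]:
    """Return one elementary_streams entry per audio file, in the given order."""
    return [
        {
            "asset_url": {
                "storage_provider": "s3",
                "url": f"{destination_path}/{name}",
            },
            "cli_options": {},
        }
        for name in audio_files
    ]


STREAMS = build_elementary_streams(
    "{{destination_path}}",
    ["audio_192kbps_elementary.ac3", "audio_commentary_elementary.ac3"],
)

if __name__ == "__main__":
    print(json.dumps(STREAMS, indent=2))
```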

We can also enable a QC check which will validate the Dolby Vision signaling in the mp4 output. For more information, refer to Dolby Vision Streams Within the ISO Base Media File Format.

Once you submit the job, Hybrik will run through all of the steps to create the Dolby Vision encode. You will notice that the “base” or “parent” job will spawn “child” jobs for each dolby_vision task defined in your job. If any of the child jobs fail, the parent job will eventually fail too.

Hints and Tips

Running a full ABR ladder over a long video takes a long time, incurs significant cost, and requires many transcoding instances. We recommend using a short segmented_rendering duration (60-300 seconds) and having hundreds of machines available to process this volume of content.
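
A quick back-of-the-envelope calculation shows why the machine count climbs so fast. The sketch below assumes that every target in the ladder is segmented independently, which is our reading of the job structure rather than a documented guarantee; the two-hour duration and six-rung ladder are hypothetical.

```python
# Sketch: estimate how many segment encodes a ladder produces.
# Assumes each target is segmented independently; duration and ladder size are made up.
import math


def segment_count(duration_sec: float, segment_sec: float) -> int:
    """Number of segments needed to cover the whole duration."""
    if segment_sec <= 0:
        raise ValueError("segment_sec must be positive")
    return math.ceil(duration_sec / segment_sec)


def total_segment_encodes(duration_sec: float, segment_sec: float, target_count: int) -> int:
    """Segments per target multiplied by the number of targets in the ladder."""
    return segment_count(duration_sec, segment_sec) * target_count


if __name__ == "__main__":
    feature_sec = 2 * 60 * 60   # hypothetical two-hour feature
    rungs = 6                   # hypothetical ladder size
    for seg in (60, 300):
        print(f"{seg:>3} s segments -> {total_segment_encodes(feature_sec, seg, rungs)} encodes")
```

Under these assumptions, 60-second segments yield 720 segment encodes and 300-second segments yield 144, so shorter segments only pay off when enough machines are available to run them in parallel.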

Hybrik Dolby Vision jobs are resource intensive and can take a long time. Currently we do not have the ability to restart a job part way through. We recommend trimming your source and encoding a single target to begin testing and get your job working piece by piece.

Examples

Dolby Vision Profile 5

- Dolby Vision Profile 5 short IMF single video example

- Dolby Vision Profile 5 full IMF ABR video example

- Dolby Vision Profile 5 full IMF ABR video and audio example

- Dolby Vision Profile 5 short embedded metadata example